RLC 2025 Schedule

📅 Monday, August 4

2 PM - 6 PM

📅 Tuesday, August 5 (Workshops)

12:30 PM - 2 PM

9 AM - 5 PM

5 PM - 6:30 PM

📅 Wednesday, August 6

8:45 AM - 9 AM

9 AM - 10 AM

10:20 AM - 11:15 AM

Four Parallel Tracks

Track 1: RL algorithms

- Burning RED: Unlocking Subtask-Driven Reinforcement Learning and Risk-Awareness in Average-Reward Markov Decision Processes [Poster #1]

- RL\(^3\): Boosting Meta Reinforcement Learning via RL inside RL\(^2\) [Poster #2]

- Fast Adaptation with Behavioral Foundation Models [Poster #3]

- Understanding Learned Representations and Action Collapse in Visual Reinforcement Learning [Poster #4]

- Mitigating Suboptimality of Deterministic Policy Gradients in Complex Q-functions [Poster #5]

- ProtoCRL: Prototype-based Network for Continual Reinforcement Learning [Poster #6]

Track 2: RL from human feedback, Imitation Learning

- Online Intrinsic Rewards for Decision Making Agents from Large Language Model Feedback [Poster #13]

- Nonparametric Policy Improvement in Continuous Action Spaces via Expert Demonstrations [Poster #14]

- DisDP: Robust Imitation Learning via Disentangled Diffusion Policies [Poster #15]

- Mitigating Goal Misgeneralization via Minimax Regret [Poster #16]

- Modelling human exploration with light-weight meta reinforcement learning algorithms [Poster #17]

- Towards Improving Reward Design in RL: A Reward Alignment Metric for RL Practitioners [Poster #18]

Track 3: Hierarchical RL, Planning algorithms

- AVID: Adapting Video Diffusion Models to World Models [Poster #25]

- The Confusing Instance Principle for Online Linear Quadratic Control [Poster #26]

- Long-Horizon Planning with Predictable Skills [Poster #27]

- Optimal discounting for offline input-driven MDP [Poster #28]

- A Timer-Enforced Hybrid Supervisor for Robust, Chatter-Free Policy Switching [Poster #29]

- Focused Skill Discovery: Learning to Control Specific State Variables while Minimizing Side Effects [Poster #30]

Track 4: Evaluation, Benchmarks

- Which Experiences Are Influential for RL Agents? Efficiently Estimating The Influence of Experiences [Poster #37]

- Offline vs. Online Learning in Model-based RL: Lessons for Data Collection Strategies [Poster #38]

- Multi-Task Reinforcement Learning Enables Parameter Scaling [Poster #39]

- Benchmarking Massively Parallelized Multi-Task Reinforcement Learning for Robotics Tasks [Poster #40]

- PufferLib 2.0: Reinforcement Learning at 1M steps/s [Poster #41]

- Uncovering RL Integration in SSL Loss: Objective-Specific Implications for Data-Efficient RL [Poster #42]

30 min break

11:45 AM - 12:30 PM

Track 1: RL algorithms (continued)

- Offline Reinforcement Learning with Domain-Unlabeled Data [Poster #7]

- SPEQ: Offline Stabilization Phases for Efficient Q-Learning in High Update-To-Data Ratio Reinforcement Learning [Poster #8]

- Offline Reinforcement Learning with Wasserstein Regularization via Optimal Transport Maps [Poster #9]

- Zero-Shot Reinforcement Learning Under Partial Observability [Poster #10]

- Adaptive Submodular Policy Optimization [Poster #11]

Track 2: RL from human feedback, Imitation Learning (continued)

- PAC Apprenticeship Learning with Bayesian Active Inverse Reinforcement Learning [Poster #19]

- Offline Action-Free Learning of Ex-BMDPs by Comparing Diverse Datasets [Poster #20]

- One Goal, Many Challenges: Robust Preference Optimization Amid Content-Aware and Multi-Source Noise [Poster #21]

- Goals vs. Rewards: A Comparative Study of Objective Specification Mechanisms [Poster #22]

Track 3: Hierarchical RL, Planning algorithms (continued)

- Representation Learning and Skill Discovery with Empowerment [Poster #31]

- Compositional Instruction Following with Language Models and Reinforcement Learning [Poster #32]

- Composition and Zero-Shot Transfer with Lattice Structures in Reinforcement Learning [Poster #33]

- Double Horizon Model-Based Policy Optimization [Poster #34]

Track 4: Evaluation, Benchmarks (continued)

- Benchmarking Partial Observability in Reinforcement Learning with a Suite of Memory-Improvable Domains [Poster #43]

- How Should We Meta-Learn Reinforcement Learning Algorithms? [Poster #44]

- AdaStop: adaptive statistical testing for sound comparisons of Deep RL agents [Poster #45]

- MixUCB: Enhancing Safe Exploration in Contextual Bandits with Human Oversight [Poster #46]

12:30 PM - 2 PM

2 PM - 3 PM

3 PM - 5:45 PM

6 PM

Event Details

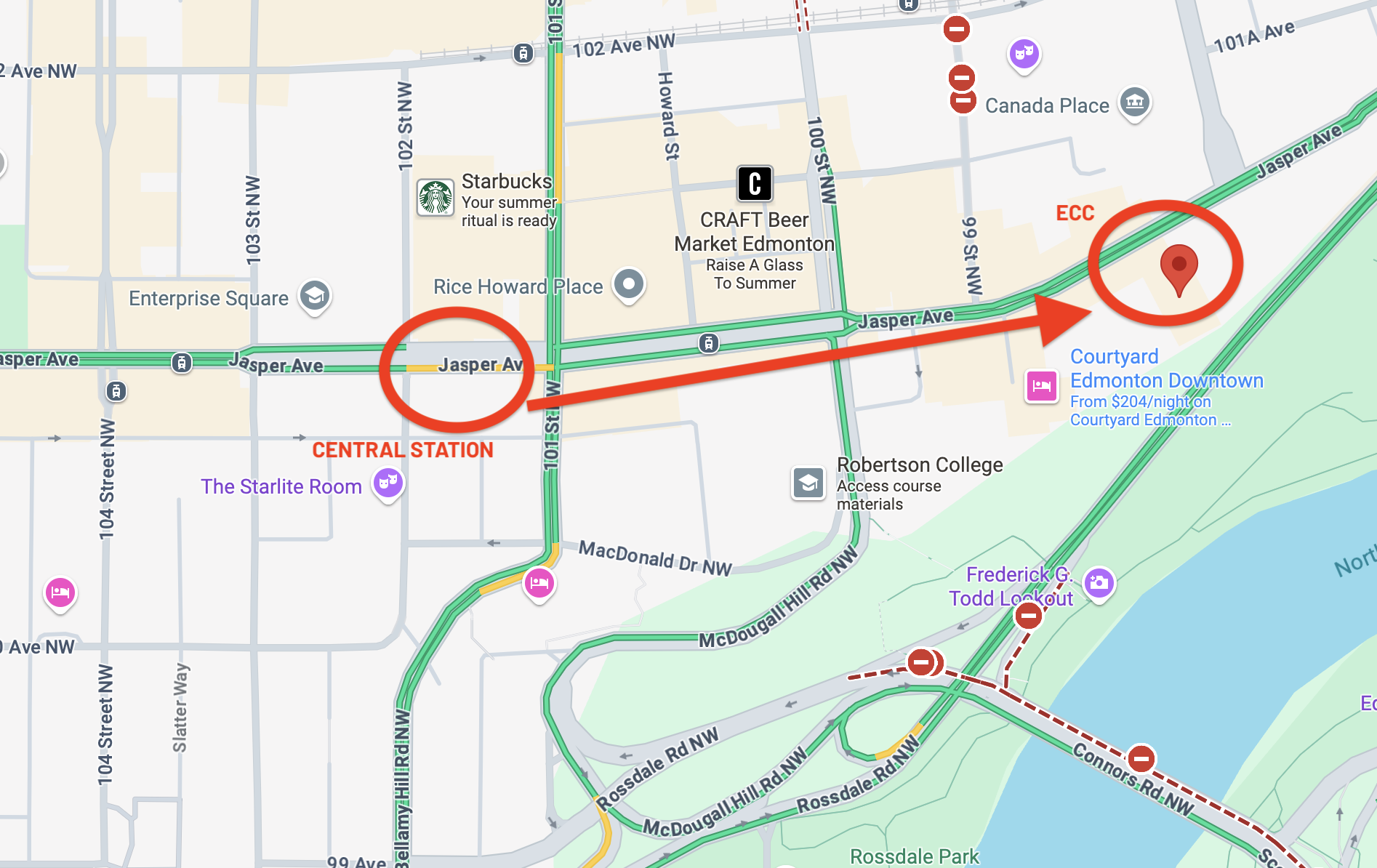

The RLC banquet dinner will be held on Wednesday, August 6, at the Edmonton Convention Centre in Hall D (https://www.edmontonconventioncentre.com/). Doors open at 6 PM, and the buffet dinner is served at 6:45 PM. Entertainment includes improv by RapidFire Theatre and a puzzle hunt by Michael Bowling and Michael Littman.

Dress code: your normal conference attire, or whatever you feel most comfortable in for a buffet meal and an evening of light entertainment and good conversation.

Address and getting there: 9797 Jasper Ave, Edmonton, AB T5J 1N9

Detailed instructions: https://www.edmontonconventioncentre.com/our-location/getting-here/

Public Transit Directions

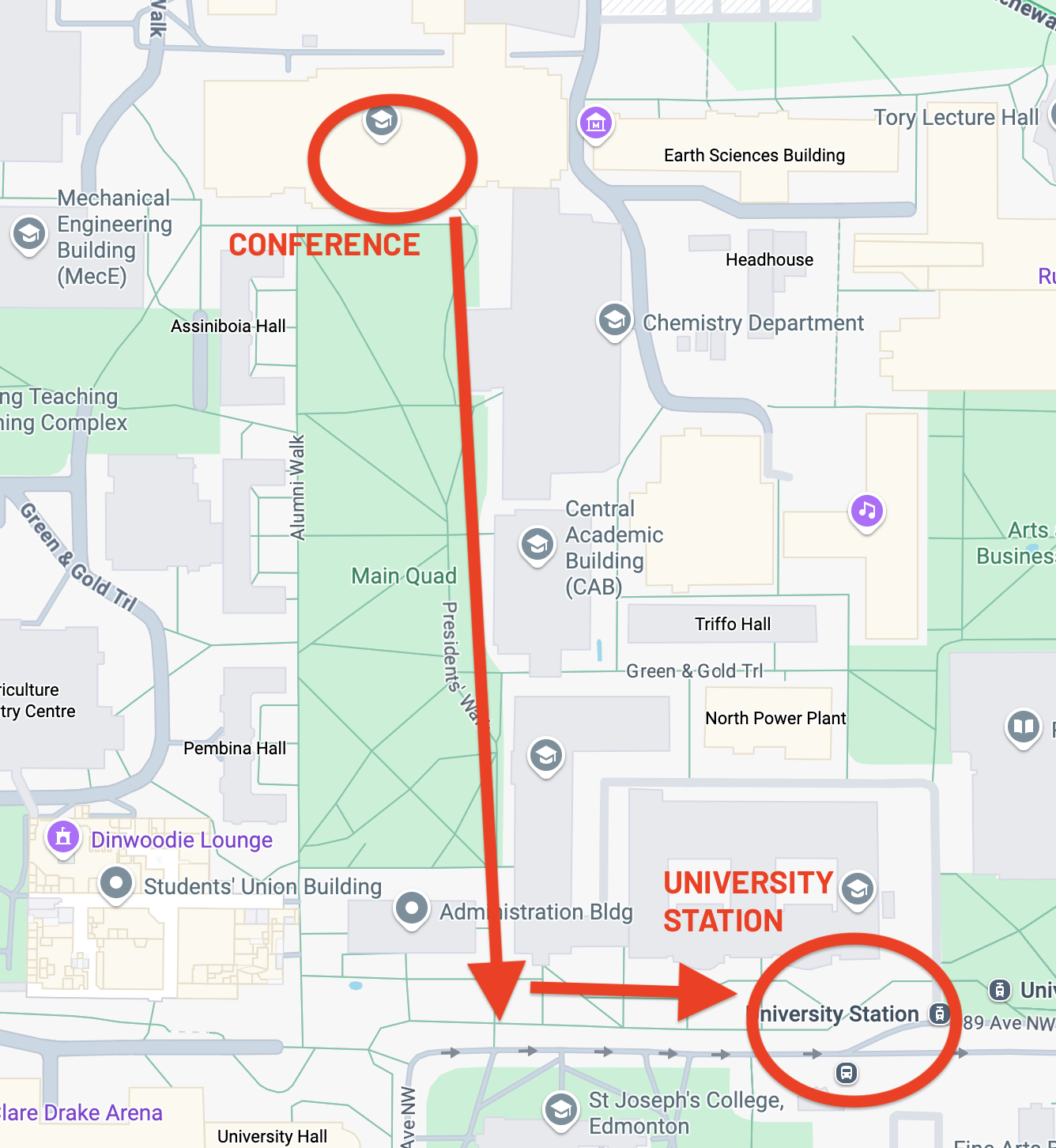

Directions by train from campus:

From University station, take a northbound train to Central station. From the platform, head up to street level; the venue is a ~5 minute walk east along Jasper Ave. Maps below.

Walking Directions

Directions by walking from campus:

Walk east from the conference venue to 109 St, then walk north on 109 St across the river via the High Level Bridge, following the pedestrian/bike trail, until you reach Jasper Avenue (or detour through the legislature grounds). Head east along Jasper Avenue to the banquet address. The walk takes around 1 hour.

📅 Thursday, August 7

9 AM - 10 AM

10:20 AM - 11:15 AM

Four Parallel Tracks

Track 1: Deep RL

- Understanding the Effectiveness of Learning Behavioral Metrics in Deep Reinforcement Learning [Poster #1]

- Impoola: The Power of Average Pooling for Image-based Deep Reinforcement Learning [Poster #2]

- Eau De \(Q\)-Network: Adaptive Distillation of Neural Networks in Deep Reinforcement Learning [Poster #3]

- Disentangling Recognition and Decision Regrets in Image-Based Reinforcement Learning [Poster #4]

- Make the Pertinent Salient: Task-Relevant Reconstruction for Visual Control with Distractions [Poster #5]

- Pretraining Decision Transformers with Reward Prediction for In-Context Multi-task Structured Bandit Learning [Poster #6]

Track 2: Social and economic aspects, Neuroscience and cognitive science

- Pareto Optimal Learning from Preferences with Hidden Context [Poster #13]

- When and Why Hyperbolic Discounting Matters for Reinforcement Learning Interventions [Poster #14]

- Reinforcement Learning from Human Feedback with High-Confidence Safety Guarantees [Poster #15]

- Towards Large Language Models that Benefit for All: Benchmarking Group Fairness in Reward Models [Poster #16]

- Reinforcement Learning for Human-AI Collaboration via Probabilistic Intent Inference [Poster #17]

- High-Confidence Policy Improvement from Human Feedback [Poster #18]

Track 3: Exploration

- Uncertainty Prioritized Experience Replay [Poster #25]

- Pure Exploration for Constrained Best Mixed Arm Identification with a Fixed Budget [Poster #26]

- Quantitative Resilience Modeling for Autonomous Cyber Defense [Poster #27]

- Learning to Explore in Diverse Reward Settings via Temporal-Difference-Error Maximization [Poster #28]

- Syllabus: Portable Curricula for Reinforcement Learning Agents [Poster #29]

- Exploration-Free Reinforcement Learning with Linear Function Approximation [Poster #30]

Track 4: Theoretical RL, Bandit algorithms

- A Finite-Time Analysis of Distributed Q-Learning [Poster #37]

- Finite-Time Analysis of Minimax Q-Learning [Poster #38]

- Improved Regret Bound for Safe Reinforcement Learning via Tighter Cost Pessimism and Reward Optimism [Poster #39]

- Non-Stationary Latent Auto-Regressive Bandits [Poster #40]

- A Finite-Sample Analysis of an Actor-Critic Algorithm for Mean-Variance Optimization in a Discounted MDP [Poster #41]

- Leveraging priors on distribution functions for multi-arm bandits [Poster #42]

30 min break

11:45 AM - 12:30 PM

Track 1: Deep RL (continued)

- Sampling from Energy-based Policies using Diffusion [Poster #7]

- Optimistic critics can empower small actors [Poster #8]

- Scalable Real-Time Recurrent Learning Using Columnar-Constructive Networks [Poster #9]

- AGaLiTe: Approximate Gated Linear Transformers for Online Reinforcement Learning [Poster #10]

- Deep Reinforcement Learning with Gradient Eligibility Traces [Poster #11]

Track 2: Social and economic aspects, Neuroscience and cognitive science (continued)

- Building Sequential Resource Allocation Mechanisms without Payments [Poster #19]

- From Explainability to Interpretability: Interpretable Reinforcement Learning Via Model Explanations [Poster #20]

- Learning Fair Pareto-Optimal Policies in Multi-Objective Reinforcement Learning [Poster #21]

- AI in a vat: Fundamental limits of efficient world modelling for safe agent sandboxing [Poster #22]

Track 3: Exploration (continued)

- Value Bonuses using Ensemble Errors for Exploration in Reinforcement Learning [Poster #31]

- Intrinsically Motivated Discovery of Temporally Abstract Graph-based Models of the World [Poster #32]

- An Optimisation Framework for Unsupervised Environment Design [Poster #33]

- Epistemically-guided forward-backward exploration [Poster #34]

- RLeXplore: Accelerating Research in Intrinsically-Motivated Reinforcement Learning [Poster #35]

Track 4: Theoretical RL, Bandit algorithms (continued)

- Multi-task Representation Learning for Fixed Budget Pure-Exploration in Linear and Bilinear Bandits [Poster #43]

- On Slowly-varying Non-stationary Bandits [Poster #44]

- Empirical Bound Information-Directed Sampling [Poster #45]

- Thompson Sampling for Constrained Bandits [Poster #46]

- Achieving Limited Adaptivity for Multinomial Logistic Bandits [Poster #47]

12:45 PM - 1:45 PM

12:30 PM - 2 PM

2 PM - 3 PM

3 PM - 6 PM

📅 Friday, August 8

8 AM - 9 AM

9 AM - 10 AM

10:20 AM - 11:15 AM

Four Parallel Tracks

Track 1: RL algorithms, Deep RL

- Bayesian Meta-Reinforcement Learning with Laplace Variational Recurrent Networks [Poster #1]

- Cascade - A sequential ensemble method for continuous control tasks [Poster #2]

- HANQ: Hypergradients, Asymmetry, and Normalization for Fast and Stable Deep \(Q\)-Learning [Poster #3]

- Rectifying Regression in Reinforcement Learning [Poster #4]

- Efficient Morphology-Aware Policy Transfer to New Embodiments [Poster #5]

- Finer Behavioral Foundation Models via Auto-Regressive Features and Advantage Weighting [Poster #6]

Track 2: Applied RL

- Action Mapping for Reinforcement Learning in Continuous Environments with Constraints [Poster #13]

- Chargax: A JAX Accelerated EV Charging Simulator [Poster #14]

- WOFOSTGym: A Crop Simulator for Learning Annual and Perennial Crop Management Strategies [Poster #15]

- Drive Fast, Learn Faster: On-Board RL for High Performance Autonomous Racing [Poster #16]

- Multi-Agent Reinforcement Learning for Inverse Design in Photonic Integrated Circuits [Poster #17]

- Gaussian Process Q-Learning for Finite-Horizon Markov Decision Process [Poster #18]

Track 3: Multi-agent RL

- Reinforcement Learning for Finite Space Mean-Field Type Game [Poster #25]

- Collaboration Promotes Group Resilience in Multi-Agent RL [Poster #26]

- Foundation Model Self-Play: Open-Ended Strategy Innovation via Foundation Models [Poster #27]

- Hierarchical Multi-agent Reinforcement Learning for Cyber Network Defense [Poster #28]

- Efficient Information Sharing for Training Decentralized Multi-Agent World Models [Poster #29]

- Adaptive Reward Sharing to Enhance Learning in the Context of Multiagent Teams [Poster #30]

Track 4: Foundations

- Effect of a slowdown correlated to the current state of the environment on an asynchronous learning architecture [Poster #37]

- Average-Reward Soft Actor-Critic [Poster #38]

- Your Learned Constraint is Secretly a Backward Reachable Tube [Poster #39]

- Recursive Reward Aggregation [Poster #40]

30 min break

11:45 AM - 12:30 PM

Track 1: RL algorithms, Deep RL (continued)

- Concept-Based Off-Policy Evaluation [Poster #7]

- Multiple-Frequencies Population-Based Training [Poster #8]

- AVG-DICE: Stationary Distribution Correction by Regression [Poster #9]

- Iterated Q-Network: Beyond One-Step Bellman Updates in Deep Reinforcement Learning [Poster #10]

Track 2: Applied RL (continued)

- Hybrid Classical/RL Local Planner for Ground Robot Navigation [Poster #19]

- V-Max: Making RL Practical for Autonomous Driving [Poster #20]

- Shaping Laser Pulses with Reinforcement Learning [Poster #21]

- Learning Sub-Second Routing Optimization in Computer Networks requires Packet-Level Dynamics [Poster #22]

Track 3: Multi-agent RL (continued)

- Seldonian Reinforcement Learning for Ad Hoc Teamwork [Poster #31]

- Joint-Local Grounded Action Transformation for Sim-to-Real Transfer in Multi-Agent Traffic Control [Poster #32]

- TransAM: Transformer-Based Agent Modeling for Multi-Agent Systems via Local Trajectory Encoding [Poster #33]

- PEnGUiN: Partially Equivariant Graph NeUral Networks for Sample Efficient MARL [Poster #34]

- Human-Level Competitive Pokémon via Scalable Offline Reinforcement Learning with Transformers [Poster #35]

Track 4: Foundations (continued)

- Investigating the Utility of Mirror Descent in Off-policy Actor-Critic [Poster #41]

- Rethinking the Foundations for Continual Reinforcement Learning [Poster #42]

- An Analysis of Action-Value Temporal-Difference Methods That Learn State Values [Poster #43]

- Reinforcement Learning with Adaptive Temporal Discounting [Poster #44]

12:30 PM - 2 PM

2 PM - 3 PM

3 PM - 6 PM

📅 Saturday, August 9

9 AM - 10 AM

10 AM - 11 AM

11 AM - 1 PM

Social Events

RLBReW After Dark

Come join us to discuss wacky RL ideas over food and drinks (sadly not sponsored), and to find collaborators and friends!

📅 When

7 PM, Tuesday, August 5